In a previous post, we got the hardware-accelerated IEC serial interface to the point where I could send bytes under attention to connected devices, and perform the talker-to-listener turn-around, using a VHDL simulation of a 1541 and some tools I built to test and debug it. Now it's time to get receiving of bytes from the bus working!

Receiving of bytes using the "slow" protocol is fairly easy: Wait for CLK to go to 5V to indicate the sender is ready, then release DATA to 5V to indicate that we are ready to receive a byte. Then 8 bits will be sent that should be latched on the rising-edge of CLK pulses. Once all 8 bits are received, the sender drives CLK low one more time, after which the receiver should drive the DATA line to 0V to indicate acknowledgement of the byte -- thus getting us back to where we started, i.e., ready to receive the next byte.
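To make the bit-latching step concrete, here is a minimal Python sketch of it. It is not the VHDL from iec_serial.vhdl: the function names and the list-of-samples representation are assumptions made purely for illustration. It treats a line level of 1 as 5V and 0 as 0V, and assembles bits least-significant first, as the standard slow protocol does.

```python
def receive_slow_byte(samples):
    """Assemble one byte from time-ordered (clk, data) line samples.

    Levels are 1 for 5V and 0 for 0V. A bit is latched on each rising
    edge of CLK, least-significant bit first.
    """
    value = 0
    bits = 0
    prev_clk = samples[0][0]
    for clk, data in samples[1:]:
        if prev_clk == 0 and clk == 1 and bits < 8:
            value |= data << bits
            bits += 1
        prev_clk = clk
    if bits != 8:
        raise ValueError(f"expected 8 bits, saw {bits}")
    return value


def encode_slow_byte(value):
    """Hypothetical helper: the samples a talker would produce for one byte."""
    samples = [(0, 1)]  # CLK held low before the first bit
    for i in range(8):
        bit = (value >> i) & 1
        samples.append((0, bit))  # DATA set up while CLK is low
        samples.append((1, bit))  # rising edge: the receiver latches here
        samples.append((0, bit))  # CLK pulled low again
    return samples
```

Feeding the output of `encode_slow_byte` into `receive_slow_byte` returns the original byte, which is a handy sanity check on the bit order.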

The C128 fast serial protocol uses the SRQ line instead of CLK to clock the data bits, and drives the data bits at a much faster rate. Bits are accepted on the rising edge of the SRQ, but the bit order is reversed compared to the normal protocol. Which protocol is being used can be determined by whether CLK or SRQ pulses first. We'll start for now with just the slow protocol, because that's all our VHDL 1541 does. Likewise, we'll worry about the JiffyDOS protocol a bit later.
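As a rough illustration of those two points, the sketch below shows the bit reversal and the "whichever line pulses first" rule in Python. The function names and the event-list representation are assumptions for the example, not anything taken from the real controller.

```python
def reverse_bits(value):
    """Reverse the bit order of an 8-bit value."""
    out = 0
    for i in range(8):
        if value & (1 << i):
            out |= 1 << (7 - i)
    return out


def detect_protocol(events):
    """Return 'fast' if SRQ pulses before CLK, 'slow' if CLK pulses first.

    `events` is a time-ordered list of the names of lines that pulsed.
    Returns None if neither line was seen.
    """
    for name in events:
        if name == "SRQ":
            return "fast"
        if name == "CLK":
            return "slow"
    return None
```

So a receiver that shifts bits in the same way for both protocols would need to apply something like `reverse_bits` to one of them before presenting the byte.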

Okay, so I have quickly implemented the receiving of bytes using both the slow and C128 fast protocol -- although I can't easily test the latter just yet. Remarkably, the only bug in the first go was that I had the bit orders all reversed.

I've also refactored the test driver, so that now my test looks something like this:

elsif run("Read from Error Channel (15) of VHDL 1541 device succeeds") then

-- Send $4B, $6F under ATN, then do turn-around to listen, and receive

-- 73,... status message from the drive.

boot_1541;

report "IEC: Commencing sending DEVICE 11 TALK ($4B) byte under ATN";

atn_tx_byte(x"4B"); -- Device 11 TALK

report "IEC: Commencing sending SECONDARY ADDRESS 15 byte under ATN";

atn_tx_byte(x"6F");

report "IEC: Commencing turn-around to listen";

tx_to_rx_turnaround;

report "IEC: Trying to receive a byte";

-- Check for first 4 bytes of "73,CBM DOS..." message

iec_rx(x"37");

iec_rx(x"33");

iec_rx(x"2c");

iec_rx(x"43");

I'm liking how clear and concise the test definition is. It's also nice to see this passing, including reading those 4 bytes! Actually, the simplicity of this test definition speaks to the simplicity of the interface that I am creating for this: Each of those test functions really just writes to a couple of registers in the IEC controller, and then checks the status register for the result. In other words, these tests can be easily adapted to run on the real hardware when done.

So anyway, we now have TX under attention, turn-around and RX working. The next missing piece is TX without attention, which should in theory be quite similar to TX under attention. The key difference is that TX without attention is allowed to use FAST and JiffyDOS protocols. This means it probably needs a separate implementation. Again, I'll start with just the slow protocol. In terms of what to test, I'm thinking I'll send a command to the 1541 to do something, and then try to read the updated status message back. I'll go with the "UI-" command, that sets the faster VIC-20 timing of the bus protocol, since it doesn't need a disk in the drive.

Hmm, the UI- command is implemented using a horrible, horrible hack in the 1541 ROM by the look of things: Take a look at $FF01 in https://g3sl.github.io/c1541rom.html and you will see that they basically put a shim routine in the NMI handler for the CPU that checks for the - sign of the UI- command. I've not dived deep enough into the 1541's internals to know why they are even using an NMI to dispatch user commands. Ah -- actually it is that the UI command just jumps to the NMI vector. So they just hacked in the + / - check. That's an, er, "efficient" solution, I suppose.

I guess in a way, it doesn't matter, provided we know that it gets executed, which we can do by watching if the PC reaches the routine at $FF01, and in particular at $FF0D where the C64/VIC20 speed flag is written to location $23.

Well, as easy as the IEC RX was, the IEC TX without ATN is proving to be a bit of a pain. Or at least my general bus control doing more complex things. Specifically, I have written another test that tries to issue the UI- command, but it fails for some reason. This is what I am doing in the test:

-- Send LISTEN to device 11, channel 15, send the "UI-" command, then

-- Send TALK to device 11, channel 15, and read back 00,OK,00,00 message

boot_1541;

report "IEC: Commencing sending DEVICE 11 LISTEN ($2B) byte under ATN";

atn_tx_byte(x"2B"); -- Device 11 LISTEN

report "IEC: Commencing sending SECONDARY ADDRESS 15 byte under ATN";

atn_tx_byte(x"6F");

report "IEC: Sending UI- command";

iec_tx(x"55"); -- U

iec_tx(x"49"); -- I

iec_tx(x"2D"); -- -

report "IEC: Sending UNLISTEN to device 11";

atn_tx_byte(x"3F");

report "Clearing ATN";

atn_release;

report "IEC: Request read command channel 15 of device 11";

atn_tx_byte(x"4b");

atn_tx_byte(x"6f");

report "IEC: Commencing turn-around to listen";

tx_to_rx_turnaround;

report "IEC: Trying to receive a byte";

-- Check for first 4 bytes of "00,OK..." message

iec_rx(x"30");

iec_rx(x"30");

iec_rx(x"2c");

iec_rx(x"4F");

It gets to the ATN release, and then ATN doesn't release. It looks like something wonky is going on with the IEC command dispatch stuff, because in the atn_release procedure it first does a command $00 to halt any existing command, and then a $41 to release ATN to 5V. It does the $00 command, but not the $41:

e): IEC: Release ATN line and abort any command in progress

: IEC: REG: register write: $00 -> reg $8

: IEC: REG: register write: $41 -> reg $8

IEC: Command Dispatch: $00

e): IEC: Request read command channel 15 of device 11

: IEC: REG: register write: $4B -> reg $9

It looks like I don't wait long enough after issuing the $00 command, before issuing the $41 command. Adding a delay now gets it further, and it tries to read the command channel status back, but still sees the 73, CBM DOS message there, not a 00, OK. So I need to look at what is going on there. Do I need to put an EOI on the last byte of the command? Do I have a bug in my 6502 CPU for the 1541? Is it something else? I'll have to investigate another day, as it's bedtime now.

Found one problem: You can't do $6F if you then want to write to the command channel. Instead you have to do $FF to open the command channel. With that, I am now getting the problem that the drive is not acknowledging the $FF byte. No idea why yet, but it's something very specific to investigate, which makes our life much easier.
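For reference, the command bytes sent under ATN in these tests are just a command group ORed with a device number or channel. The Python sketch below shows how $4B, $2B, $6F and $FF come about; the helper names are made up for illustration, while the base values are the standard IEC ones.

```python
# Standard IEC command groups sent under ATN.
LISTEN, TALK, SECONDARY, OPEN = 0x20, 0x40, 0x60, 0xF0
UNLISTEN, UNTALK = 0x3F, 0x5F


def _check(value, limit, what):
    if not 0 <= value <= limit:
        raise ValueError(f"{what} out of range: {value}")
    return value


def listen(device):
    return LISTEN | _check(device, 30, "device")


def talk(device):
    return TALK | _check(device, 30, "device")


def secondary(channel):
    return SECONDARY | _check(channel, 15, "channel")


def open_channel(channel):
    return OPEN | _check(channel, 15, "channel")
```

With device 11 and channel 15 this gives `talk(11) == 0x4B`, `listen(11) == 0x2B`, `secondary(15) == 0x6F` and `open_channel(15) == 0xFF`, matching the bytes used in the test scripts.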

Digging through the 1541 ROM disassembly I found that indeed the DOS commands do have to be terminated with an EOI, so I will need to implement EOI next. But for some reason I'm now getting a DEVICE NOT PRESENT when sending the $FF secondary address. Sending $48 to talk instead works fine, though.

Well, I had the ACK sense of the data line inverted in my check. How that was even working before, I have no idea, other than my very fast hardware implementation was able to think the byte was being ACK'd before the 1541 ROM had time to pull DATA low. That is, the famous charts on pages 364-365 of the Programmer's Reference Guide erroneously claim T_NE has no minimum, when in fact it does.

The next problem is that the 1541 is missing a bit or two in the byte being sent as part of the DOS command. This seems to rather ironically be because the sender is sending the bits slightly slower than it would like, which causes the 1541 ROM to have to loop back to check for the bit again.

More carefully reading the timing specifications, I am still being a bit too fast: It should be 70usec with CLK low, and 20 with CLK high for each bit. I was using 35 + 35. I'm a little sceptical that 70+20 will work, as I'm fairly sure that more like 35 usec is required with CLK high. But we will see. It might just be that this combination causes the 1541 loops to be in the right place, for it to work.
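To put numbers on the two timing choices, here is a small Python calculation. It only counts the eight bit cells themselves and ignores the ready-to-send and acknowledge handshakes, so the byte rates are optimistic upper bounds rather than real throughput figures.

```python
def byte_time_us(clk_low_us, clk_high_us, bits=8):
    """Time spent clocking out the bits of one byte, in microseconds."""
    return bits * (clk_low_us + clk_high_us)


def max_bytes_per_second(clk_low_us, clk_high_us):
    """Upper bound on byte rate, ignoring all per-byte handshaking."""
    return 1_000_000 / byte_time_us(clk_low_us, clk_high_us)


spec_timing = byte_time_us(70, 20)     # 720 us per byte
earlier_timing = byte_time_us(35, 35)  # 560 us per byte
```

So moving from 35 + 35 to 70 + 20 stretches each bit cell from 70usec to 90usec, with all of the extra time (and more) going to the CLK-low phase.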

Well, it does seem to work. So that's a plus.

Now I am back to reading error code 73,CBM DOS... instead of 00, OK,00,00, because the DOS command is not being executed. As already mentioned, this is partly at least because I need to implement EOI. But I'm also seeing the 1541 seems to not think that the command channel is available when processing the bytes received:

: IEC: REG: register write: $55 -> reg $9

: IEC: REG: register write: $31 -> reg $8

IEC: Command Dispatch: $31

iec_state = 400

+2702.663 : ATN=1, DATA=0(DRIVE), CLK=0(C64)

iec_state = 401

+19.062 : ATN=1, DATA=0(DRIVE), CLK=1

$EA2E 1541: LISTEN (Starting to receive a byte)

$EA44 1541: LISTEN OPEN (abort listening, due to lack of active channel)

$E9C9 1541: ACPTR (Serial bus receive byte)

$E9CD 1541: ACP00A (wait for CLK to go to 5V)

Now, I don't know if that's supposed to happen, and it's just the lack of EOI stuffing things up, or whether it's a real problem. And I won't know until I implement EOI, so let's do that before going any further. For EOI, instead of holding T_NE for 40 usec, we hold it for 200usec, and expect to see the drive pull DATA low for ~60usec to acknowledge the EOI.
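From the sender's point of view, the check during the long hold amounts to looking for a low pulse on DATA. A minimal Python sketch of that check is below. The trace format and the 40usec minimum pulse width are assumptions for illustration only; the text above only says the acknowledgement pulse is around 60usec.

```python
def eoi_acknowledged(data_trace, min_pulse_us=40):
    """Return True if DATA was pulled low and released again for long enough.

    `data_trace` is a time-ordered list of (time_us, level) transitions on
    the DATA line, with level 0 meaning 0V and 1 meaning 5V.
    `min_pulse_us` is a hypothetical tolerance, not a documented value.
    """
    low_since = None
    for time_us, level in data_trace:
        if level == 0 and low_since is None:
            low_since = time_us
        elif level == 1 and low_since is not None:
            if time_us - low_since >= min_pulse_us:
                return True
            low_since = None  # too short: treat it as a glitch
    return False
```

For example, a trace of `[(0, 1), (200, 0), (260, 1)]` counts as an acknowledged EOI, while a trace where DATA never goes low corresponds to the timeout case.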

I have that implemented now, but the 1541 is just timing out, without acking the EOI. It looks like the 6522 VIA timer that the 1541 uses to indicate this situation isn't ticking. It looks like my boot speed optimisations have probably prevented something that needs to happen: It looks like the code at $FB20 is what enables timer 1. But that seems to be in the disk format routine, so I don't think that's right. Maybe the 6522 is supposed to power on with Timer 1 already in free-running mode? It's a mystery that will have to wait until after I have slept...

Ok, nothing like a night's sleep to nut over a problem. At $ED9A the high byte of timer 1 gets written to, which should set the timer running in one-shot mode. But it never counts down. That's the cause of the EOI timeout not being detected. Digging into the VIA 6522 implementation I found the problem: When I had modified it to run at 40.5MHz internally, I messed up the cycle phase advance logic. This meant that it never got to the point of decrementing the timer 1 value, and thus the problem we have been experiencing. There were also some other problems with the shift to 40.5MHz, that were preventing writes to the registers of the 6522.

With all that fixed, I can see the timer 1 counting down during the EOI transmission, but when it reaches zero, the interrupt flag for the timer is not being asserted, and thus the 1541 never realises that the timer has expired.

Ok, found and fixed a variety of bugs that were causing that, including in my IEC controller, as well as in the 40MHz operation of the VIA 6522. With that done, the EOI byte is now sent, and it looks like it is correctly recognised. I now need to check:

1. Does the 1541 really process the EOI?

2. Why does the 6502 in the 1541 get stuck in an infinite IRQ loop when ATN is asserted the next time? (given the first time ATN was asserted, there was no such problem)

Hmm... Well, I did something that fixed that. Now it's actually trying to parse the DOS command, which is a great step forward :) It's failing, though, because it's now hitting some opcodes I haven't implemented yet in the CPU, specifically JMP ($nnnn). That won't be hard to implement. And indeed it wasn't. So where are we up to now?

The command to get the device to read out the command channel after sending the command fails with device not present. This seems to be because the 1541 is still busy processing the DOS command. The controller has no way to detect this, so far as I can tell. The simple solution is to make sure that we allow enough time for the DOS command to be processed.

After allowing some more time, and fixing a few other minor things, including in the test, the test now passes. Here's the final test script. See if you can spot the errors I picked up in the test definition:

elsif run("Write to and read from Command Channel (15) of VHDL 1541 device succeeds") then

-- Send LISTEN to device 11, channel 15, send the "UI-" command, then

-- Send TALK to device 11, channel 15, and read back 00,OK,00,00 message

boot_1541;

report "IEC: Commencing sending DEVICE 11 LISTEN ($2B) byte under ATN";

atn_tx_byte(x"2B"); -- Device 11 LISTEN

report "IEC: Commencing sending OPEN SECONDARY ADDRESS 15 byte under ATN";

atn_tx_byte(x"FF"); -- Some documentation claims $FF should be used

-- here, but that yields device not present on the

-- VHDL 1541 for some reason? $6F seems to work, though?

report "Clearing ATN";

atn_release;

report "IEC: Sending UI- command";

iec_tx(x"55"); -- U

iec_tx(x"49"); -- I

iec_tx_eoi(x"2D"); -- -

report "IEC: Sending UNLISTEN to device 11";

atn_tx_byte(x"3F");

report "Clearing ATN";

atn_release;

-- Processing the command takes quite a while, because we have to do

-- that whole computationally expensive retrieval of error message text

-- from tokens thing.

report "IEC: Allow 1541 time to process the UI- command.";

for i in 1 to 300000 loop

clock_tick;

end loop;

report "IEC: Request read command channel 15 of device 11";

atn_tx_byte(x"4b");

atn_tx_byte(x"6f");

report "IEC: Commencing turn-around to listen";

tx_to_rx_turnaround;

report "IEC: Trying to receive a byte";

-- Check for first 5 bytes of "00, OK..." message

iec_rx(x"30");

iec_rx(x"30");

iec_rx(x"2C");

iec_rx(x"20");

iec_rx(x"4F");

This is quite a satisfying achievement, as it is a complete plausible IEC transaction.

Okay, now that we have a lot of it working, it's time to implement detection of EOI, and then we have the complete slow serial protocol implemented, so far as I can tell, and I can synthesise a bitstream incorporating it, and see if I can talk to a real 1541 with it, before I move on to completing and testing the JiffyDOS and C128 fast serial variants of the protocol.

The EOI detection ended up being a bit fiddly, partly because the IEC timing diagrams don't indicate which side of a communication is pulling lines low, so I confused myself a couple of times. But it is the _receiver_ of an EOI who has to acknowledge it by pulsing the DATA line low. Once I had that in place, it was fine. The test does now take about 5 minutes to run, because I have to simulate several milliseconds of run-time to go through the whole process that culminates in receiving a character with EOI. In the process, I did also make the reception of non-EOI bytes explicitly check that no EOI is indicated in that case.

The next step is to test the bitstream. I did a quick initial test, but it didn't talk to my 1541. Also, my Pi1541 I think has a blown 7406 that I need to replace. I think I have spares in the shed. I'm keen to measure the voltage directly at the IEC connector when setting and clearing the various signals, to make sure that it is behaving correctly. I might finally get around to making an IEC cable that has open wiring at one end, so that I can easily connect it to the oscilloscope.

A quick probe with the oscilloscope and a simple BASIC program that POKEs values to $D698 to toggle the CLK, DATA and ATN lines shows no sign of life on the pins. So I'm guessing that my plumbing has a problem somewhere. So let's trace it through.

The iec_serial.vhdl module has output signals called iec_clk_en_n, iec_data_en_n and iec_atn. Those go low when the corresponding IEC lines should be pulled low. We know those work, because our VHDL test framework confirmed this.

The iec_serial module is instantiated in iomapper, where those pins are assigned as follows:

iec_clk_en_n => iec_hwa_clk,

iec_data_en_n => iec_hwa_data,

iec_srq_en_n => iec_hwa_srq,

The 2nd CIA that has the existing IEC interface then has the following pin assignments:

-- CIA port a (VIC-II bank select + IEC serial port)

portain(2 downto 0) => (others => '1'),

portain(3) => iec_atn_reflect, -- IEC serial ATN

-- We reflect the output values for CLK and DATA straight back in,

-- as they are needed to be visible for the DOS routines to work.

portain(4) => iec_clk_reflect,

portain(5) => iec_data_reflect,

portain(6) => iec_clk_external,

portain(7) => iec_data_external,

portaout(2) => dummy(2),

portaout(1 downto 0) => dd00_bits_out,

portaout(3) => iec_atn_fromcia,

portaout(4) => iec_clk_fromcia,

portaout(5) => iec_data_fromcia,

portaout(7 downto 6) => dummy(4 downto 3),

portaddr(3 downto 2) => dummy(8 downto 7),

portaddr(4) => iec_clk_en,

portaddr(5) => iec_data_en,

portaddr(7 downto 6) => dummy(10 downto 9),

portaddr(1 downto 0) => dd00_bits_ddr,

spin => iec_srq_external,

spout => iec_srq_o,

sp_ddr => iec_srq_en,

countin => '1'

This is then all tied to the output of iomapper.vhdl using the following:

iec_clk_reflect <= iec_clk_fromcia and (not iec_hwa_clk);

iec_data_reflect <= iec_data_fromcia and (not iec_hwa_data);

iec_atn_reflect <= iec_atn_fromcia and iec_hwa_atn;

iec_clk_o <= iec_clk_fromcia and (not iec_hwa_clk);

iec_data_o <= iec_data_fromcia and (not iec_hwa_data);

iec_atn_o <= iec_atn_fromcia and iec_hwa_atn;

This is kind of doubled-up, because we have the reflected internally readable signals with _reflect suffixes, as well as the signals actually being output on the pins with _o suffixes.

Where it gets a bit crazy, is that the inputs and outputs are reversed on the CIA to the IEC port, because they go through either invert buffers on the input side, or through open-collector inverters on the output side -- and both are joined together, as can be seen in the following snippet of the C64 schematic:

In any case, the input side comes in via iec_clk_external and friends. Those don't come into the current situation, because we are just trying to debug the setting of IEC port lines at the moment.

So let's move up to machine.vhdl, which instantiates iomapper.vhdl and really just passes the signals up to the next level, where it is instantiated by the top-level file for each target, e.g., mega65r4.vhdl or mega65r3.vhdl. In those files, iec_clk_o is connected to iec_clk_o_drive, and so on. This gives the FPGA an extra 25ns clock tick to propagate the signals around.

Because the MEGA65 uses active-low open-collector output buffers for the IEC port, we have both an enable and a signal level we can set. If you set the enable to low, whatever is on the signal level will get pushed out onto the pin. Otherwise it is tri-stated, i.e., not pushing a signal out. So we have the following code:

-- The active-high EN lines enable the IEC output drivers.

-- We need to invert the signal, so that if a signal from CIA

-- is high, we drive the IEC pin low. Else we let the line

-- float high. We have external pull-ups, so shouldn't use them

-- in the FPGA. This also means we can leave the input line to

-- the output drivers set a 0, as we never "send" a 1 -- only relax

-- and let it float to 1.

iec_srq_o <= '0';

iec_clk_o <= '0';

iec_data_o <= '0';

-- Finally, because we have the output value of 0 hard-wired

-- on the output drivers, we need only gate the EN line.

-- But we only do this if the DDR is set to output

iec_srq_en <= not (iec_srq_o_drive and iec_srq_en_drive);

iec_clk_en <= not (iec_clk_o_drive and iec_clk_en_drive);

iec_data_en <= not (iec_data_o_drive and iec_data_en_drive);

That is, by keeping the selected signal set to 0, we can pull each IEC line low by setting the corresponding _en signal low.
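The gating above is easy to get backwards, so here is a small Python model of it. It mirrors the `not (… and …)` expression from the VHDL and treats the physical line as an open-collector bus with an external pull-up. The function names and the `other_devices_pulling` parameter are assumptions for illustration.

```python
def enable_n(o_drive, en_drive):
    """Mirror of: iec_clk_en <= not (iec_clk_o_drive and iec_clk_en_drive).

    Returns the active-low enable: 0 switches the output driver on.
    """
    return 0 if (o_drive and en_drive) else 1


def pin_level(en_n, other_devices_pulling=False):
    """Level on an open-collector line with an external pull-up.

    The driver input is hard-wired to 0, so enabling it pulls the line to
    0V. The line only floats up to 5V (1) when nobody is pulling it low.
    """
    if en_n == 0 or other_devices_pulling:
        return 0
    return 1


# Truth table for the local driver alone.
table = {(o, e): pin_level(enable_n(o, e)) for o in (0, 1) for e in (0, 1)}
```

In this model the line only goes low from our side when both the `_o_drive` and `_en_drive` signals are 1, and a connected drive pulling the line low wins regardless of what the enable is doing.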

Now, as mentioned earlier, the CIA sees these lines inverted. Thus we have to invert them here with those 'not' keywords. This is also why we invert the iec_serial signal select lines, because they are inverted for a second time here at the top level.

Anyway, all that plumbing looks fairly reasonable. I did see that the SRQ line was not correctly plumbed, which I have fixed.

However one subtle point is important here: The logic that takes the iec_serial outputs only uses the CIA to select the pin directions. This means that if the CIA has the CLK or DATA pins set to input, that the iec_serial logic can't drive the physical pins.

That shouldn't be an issue, though, because the CIAs are normally set to the correct directions on boot. But it's also the most likely problem in the setup that I can think of right now. It can of course be fairly easily checked. I can also try using the CIA to control the IO lines, and see whether that's working, or if there is some sign that I have borked something else up, e.g., in the FPGA pin mappings.

So, trying to toggle the lines using the CIA, I can make DATA and ATN toggle, but not CLK. I've just double-checked on the schematic to make sure that the FPGA pins for the CLK line are set correctly, and they are. The CLK line stays at +5V, so I assume that the enable line for output to the CLK pin is not being pulled low when it should, since the actual value on the signal is tied to 0 in the FPGA. Hmm... I can't see any problem with that plumbing. This makes me worry a bit that the CLK output driver on my R4 board might be cactus. I should be able to test this fairly easily by making a trivial bitstream that just toggles it, or finding another test bitstream that can do that for me, without me having to resynthesise it.

It's possible that this line is borked. Sometimes I can switch the output driver on and off, but other times it resists. Interestingly, when I rerun the bitstream, it starts with CLK low, and I can then change the _o and _en lines, and make it go high. It's the getting it to go low again that is the problem.

I can toggle the iec_clk_en line, if I haven't touched the iec_clk_o line first, and it seems to switch reliably. Hmm.. Made a new bitstream with iec_clk_o tied low, and the switching using iec_clk_en works fine -- which is actually what the full MEGA65 bitstream is doing. So it's all a bit weird.

I'm now just going to go through all the IEC line plumbing like a pack of Epsom salts, and make sure everything is consistent. I'm going to make all the signals match the expected voltages on the line, i.e., binary 0 will cause 0V, and binary 1 will cause release to pull-up to 5V, and generally simplify everything as much as I can.

Okay, with all that done, I can use the Pi1541, so all the IEC lines are working. This also confirms that the addition of the iec_serial module has not interfered with the operation of the IEC bus.

So let's now try setting and clearing the various lines via the iec_serial.vhdl interface.

The DATA line can be controlled, and if asserted via iec_serial.vhdl, then the normal IEC interface is non-responsive until it is released -- as it should be. The same goes for the ATN line and the CLK line! So it looks like we should be good to try the hardware accelerated IEC communications.

... except that it doesn't want to talk to the Pi1541 at least, and I can't get it to report DEVICE NOT PRESENT if nothing is connected. So something is going wrong still. The CLK and DATA lines can be read correctly by iec_serial.vhdl, which I have confirmed by using the ability to read those signals from the status byte at $D698 of the iec_serial module.

So what on earth is going on?

The Pi1541 is handy in that it has an HDMI output that shows the ATN, DATA and CLK lines in a continuous graph. Unfortunately it doesn't have high enough time resolution to resolve the transmission of individual characters or bits.

Trying now with the real 1541. In the process I realised I had a silly error in my test program, which after fixing, I can now correctly detect DEVICE NOT PRESENT, and also the opposite, when the device number matches the present device. However it hangs during the IEC turn-around sequence. This now works identically with the 1541 and the Pi1541. So why does it get stuck in the IEC turn-around sequence?

The automated tests for the turn-around still pass, so why isn't it passing in real life?

My gut feeling is that the CLK or DATA line is somehow not being correctly read by the iec_serial module when plumbed into the MEGA65 core. Turnaround requires reading the CLK line. It looks like the CLK line never gets asserted to 0V by the drive for some reason.

This is all very weird, as for the 1541 at least, the simulation is using exactly the same ROM etc. Perhaps the turn-around is marginally too fast, and the 20usec intervals are not being noticed, especially if there is a bit of drift in the clock on master or slave. It's easy to increase that 20usec to, say, 100usec, and try it out again after resynthesising.

Ok, that gets us a step further: The turnaround from talker to listener now completes. However, reading the bytes from the DOS status still times out. Now, this could be caused by the Pi1541 supporting either JiffyDOS or C128 fast serial, and thus mistakenly trying to use one of those when sending the bytes back, as we don't have those implemented on byte receive yet. If that's the case, it should be possible to talk to my physical 1541, which supports neither.

Well, the physical 1541 still hangs at the turn-around to listen. How annoying. I'll have to hook up the oscilloscope to this somehow, and probe the lines while it is doing stuff, to see what's going on. Oh how nice it would be to be able to easily probe the PC of the CPU in the real 1541 :) If I had a 16-channel data logger, I could in theory put probes on the address lines of the CPU, but I don't, and it really shouldn't be necessary. Just probing CLK and DATA on the oscilloscope should really be sufficient. So I'll have to make that half-IEC cable, so that I can just have the oscilloscope probes super easily connected.

I went and bought the parts for that, and then realised that it would be better to just make a VHDL data-logger. The idea would be to log all the IEC signal states, and to save BRAM space for logging them, log how many cycles between each change. This would let us log over quite long time periods using only a small amount of BRAM. Using 32 bit BRAM, we should be able to record the input state of CLK, DATA and SRQ, and the output state of CLK, DATA, SRQ, ATN and RESET. That's 8 bits. Then we can add a 24-bit time step from the last change, measured in clock cycles. At 40.5MHz, that's up to 414ms per record. This means we can potentially record 1024 records in a 4KB BRAM covering up to 424 seconds, or about 7 minutes. Of course, if the lines are waggling during a test, then it will be much less than this. But it's clearly long enough.
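The record format and the capacity arithmetic can be sketched in a few lines of Python. The field layout (signal state in the top byte, cycle count in the low 24 bits) is an assumption for the example; the plan above only fixes the field widths.

```python
CLOCK_HZ = 40_500_000


def pack_record(state, delta_cycles):
    """Pack 8 bits of signal state and a 24-bit cycle count into 32 bits."""
    if not 0 <= state <= 0xFF:
        raise ValueError("state must fit in 8 bits")
    if not 0 <= delta_cycles <= 0xFFFFFF:
        raise ValueError("delta must fit in 24 bits")
    return (state << 24) | delta_cycles


def unpack_record(word):
    return word >> 24, word & 0xFFFFFF


def log_changes(states):
    """Emit one record per change in a per-cycle list of signal states."""
    records = []
    prev = None
    since = 0
    for state in states:
        if state != prev:
            records.append(pack_record(state, since))
            prev = state
            since = 0
        since += 1
    return records


def max_span_seconds(bram_bytes=4096):
    """Longest time a full log can cover, if every delta is at its maximum."""
    records = bram_bytes // 4
    return records * (1 << 24) / CLOCK_HZ
```

With these numbers each record can span up to about 0.414 seconds, and `max_span_seconds()` comes out at roughly 424 seconds for a 4KB buffer. Note that this sketch simply raises an error if a gap exceeds 24 bits; a real logger would need to decide whether to saturate or emit a filler record.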

I'll implement this in iec_serial.vhdl, with a special command mode telling it to reset the log buffer, and then another special command that tells it to play out the 4KB buffer. I can rework the existing debug logger I had made, that just logged 8 bits per event, at ~1usec intervals, thus allowing logging of about 4ms. I can just make it so that it builds the 32 bit value to write, and commits it over 4 clock cycles, so that I don't need to change the BRAM to an asymmetric interface with 32 bit writes and 8 bit reads, although that's quite possible to do.
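As a sketch of the "commit over 4 clock cycles" idea, the Python below writes a 32-bit record into a byte-wide buffer one byte per simulated cycle, and reads it back. The little-endian byte order and the function names are assumptions; the post does not say which order the VHDL uses.

```python
def commit_record(bram, index, word):
    """Write a 32-bit word into a byte-wide buffer, one byte per cycle.

    Byte order is little-endian here, which is an assumption.
    """
    for cycle in range(4):
        bram[index * 4 + cycle] = (word >> (8 * cycle)) & 0xFF


def read_record(bram, index):
    """Reassemble the 32-bit word from four consecutive bytes."""
    return int.from_bytes(bram[index * 4:index * 4 + 4], "little")


bram = bytearray(4096)           # 4KB, i.e. room for 1024 records
commit_record(bram, 3, 0xA5123456)
```

The cost of this approach is that a new record can only be started every four cycles, which is negligible at 40.5MHz compared with the microsecond-scale timing of the IEC lines.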

Ok, I have implemented this, let's see if it works. IEC accelerator command $D0 clears the signal log, and effectively sets it going again, $D1 resets the reading to the start of the log, and $D2 reads the next byte from the signal log, making it visible on the IEC data register... Assuming it works :) The first step is to check if it synthesises, and I'll probably work on testing under simulation at the same time.

At this point, it would be really nice if the new FPGA build server were ready, to cut the synthesis time down from 45 -- 60 minutes, down to hopefully more like 10 minutes. But as yet, I don't have a delivery date. Hopefully Scorptec will give me an update in the coming days. The only progress I have on that front so far, is that I separately purchased some thermal transfer paste for the CPU, that I realised I hadn't included in the original order.

It's now a few weeks later, the new FPGA build machine has arrived, and cuts synthesis down to around 12 -- 15 minutes, which I've been using in the meantime to fix some other bugs. So that's great.

Now to tackle the remaining work for this, beginning with repairing my Pi1541, which I am pretty sure has a blown open-collector inverter chip. It can use a 74LS05, 74LS06, 74LS16 or 7405 or 7406. The trick is whether I have any spares on hand, it being the Christmas holidays and all, and thus all the shops will be shut.

Fortunately I do still have a couple on hand. In fact, what I found first is a ceramic packaged 7405 with manufacture date of week 5 of 1975!

Hopefully that brings it back to life again.

Unfortunately not, it seems. Not yet sure if it is the 48 year old replacement IC that is the problem, or something else.

I think in any case, that I will go back to my previous idea for testing, which is to stick with the VHDL 1541, which I have working with stock ROM, and then try that with a JiffyDOS ROM, and make sure JiffyDOS gets detected, and that it all works properly. Then I can do a VHDL 1581 with and without JiffyDOS, to test the fast serial mode, as well as its interaction with JiffyDOS, to make sure that it works with all of those combinations. In theory, that should cover the four combinations of communications protocol adequately, and give me more time to think about real hardware for testing.

I also need to separate all my changes out into a separate feature branch, so that it can be more easily merged in to the mainline tree later. That's probably actually the sensible starting point, in fact.

Ok, I now have the 736-hardware-iec-accel branch more or less separated out. I've confirmed that the IEC bus still works under software control, so that's good.

Next step is to make sure that the hardware-accelerated IEC interface is also still working. I have my test program that tries to read the start of the 1541 DOS status message from a real drive I have connected here:

And it gets part way through, but fails to complete the turn-around to listen:

I recall having this problem before. Anyway, the best way to deal with this is to run the unit tests I built around this -- hopefully they will still work on this new branch, else I will have to fix that first.

... and all the tests fail. Hmmm...

Oddly there don't seem to be any changes that are likely to be to blame for this. So I'll have to dig a bit deeper. The emulated 1541's CPU seems to hit BRK instructions. This happens after the first RTS instruction is encountered. Is it that the RAM of the emulated drive is not behaving correctly? Nope, in the branch separation process, I had messed up the /WRITE line from the CPU, so when the JSR was happening, the return address was not being written to the stack -- and thus when the RTS was read, unhelpful things happened...
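To see why a dead /WRITE line leads straight to BRK, here is a toy Python model of the 6502's JSR/RTS stack behaviour. It assumes the drive RAM reads back as zero when nothing has been written, which may not match the actual simulation; the class and function names are made up for the example.

```python
class TinyStack:
    """Just enough of a 6502 to push and pull bytes on the stack page."""

    def __init__(self, write_enabled=True):
        self.ram = bytearray(0x200)  # zero page + stack page, all zeros
        self.sp = 0xFF
        self.write_enabled = write_enabled

    def push(self, value):
        if self.write_enabled:  # a broken /WRITE line drops the store
            self.ram[0x100 + self.sp] = value
        self.sp = (self.sp - 1) & 0xFF

    def pull(self):
        self.sp = (self.sp + 1) & 0xFF
        return self.ram[0x100 + self.sp]


def jsr_then_rts(jsr_addr, write_enabled=True):
    """Address where execution resumes after a JSR and its matching RTS."""
    cpu = TinyStack(write_enabled)
    ret = (jsr_addr + 2) & 0xFFFF  # JSR pushes the address of its last byte
    cpu.push(ret >> 8)
    cpu.push(ret & 0xFF)
    lo = cpu.pull()
    hi = cpu.pull()
    return (((hi << 8) | lo) + 1) & 0xFFFF  # RTS adds one to the pulled address
```

With writes working, `jsr_then_rts(0xE884)` resumes at $E887 as expected. With writes dropped, the RTS pulls two zero bytes and lands at $0001, and since opcode $00 is BRK, executing zeroed memory there produces exactly the kind of BRK storm described above.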

Now re-running the tests, and at least some of them are passing. Let's see what the result is in a moment:

==== Summary ======================================================================================================

pass lib.tb_iec_serial.Simulated 1541 runs (24.1 seconds)

pass lib.tb_iec_serial.ATN Sequence with no device gets DEVICE NOT PRESENT (4.2 seconds)

pass lib.tb_iec_serial.Debug RAM can be read (4.2 seconds)

fail lib.tb_iec_serial.ATN Sequence with dummy device succeeds (7.2 seconds)

fail lib.tb_iec_serial.ATN Sequence with VHDL 1541 device succeeds (22.2 seconds)

fail lib.tb_iec_serial.Read from Error Channel (15) of VHDL 1541 device succeeds (23.8 seconds)

fail lib.tb_iec_serial.Write to and read from Command Channel (15) of VHDL 1541 device succeeds (23.2 seconds)

===================================================================================================================

As I've said before, failing tests under simulation is a good thing: It's way faster to fix such problems. I'm suspecting an IEC line plumbing error of some sort.

But for my sanity, I'm going to run the tests on the pre-extraction version of the branch, just to make sure I haven't missed anything obvious -- and it has the same failing tests there. I have a vague memory that the tests started failing once I plumbed the whole IEC bus logic together -- both the CIAs and the new hardware-accelerated interface -- along with the complete emulated 1541.

Let's start with the first failing one, the ATN sequence with a dummy device, as that should also be the easiest to debug. It seems just as likely to me that a single fault is at play in all of them, like ATN not releasing, or something like that.

Okay, so the first issue is that in the test cases, the ATN line is not being correctly presented: The dummy device never sees ATN go low. This is, however, weird, because the sole source of the ATN signal in this test framework is from the hardware accelerated interface. And it looks like it is being made visible.

The problem there was that the dummy device was looking at the wrong internal signal for the ATN line, and thus never responded. That's fixed now. I don't think that's going to fix the problem for the emulated 1541, but I'm expecting it to be something similar.

It looks like the 1541 only thinks it has received 6 bits, rather than 8. Most likely this will be some timing issue in our protocol driver. This is reassuring, as it is a probable cause for the issues we were seeing on real hardware.

Trudging through the 1541 instruction trace, I can see that the CLK line gets released, ready to start receiving data bits, and that the 1541 notices this, and then releases the DATA line in response. At this point, our hardware IEC driver begins sending bits... but it looks like the 1541 pulls the DATA line low again! Why is this?

So let's trace through the ATN request service routine again, to see where it goes wrong. The entry is at $E85B, which then calls ACPTR ($E9C9) from $E884 to actually read the byte. The entry occurs at instruction #3597 in the 1541 simulation.

Let's go through this methodically:

1. The IEC controller sets ATN=0, CLK=0, and the 1541 is not driving anything, at instruction 3393.

2. The IEC controller is in state 121, which is where it waits up to 1ms for the DATA line to be pulled low by the 1541, at instruction 3397.

3. The 1541 ROM reaches $E85B, the ATN service routine, at instruction 3597.

4. The 1541 pulls DATA low to acknowledge the ATN request, at instruction 3616.

5. The IEC controller advances to state 122 after waiting 1ms for DATA to go low, at instruction 3724.

6. The 1541 enters the ACPTR routine at $E9C9, at instruction 3729. This is the start of the routine to receive a byte from the IEC bus, and indicates that the CLK low pulse from the IEC controller has been observed.

7. The 1541 releases the DATA line at $E9D7, at instruction 3748. This occurs because the 1541 ROM sees that the CLK line has been released by the IEC controller.

8. The IEC controller reaches state 129, which is the clock low half of the first data bit, at instruction 3760.

Okay, so at this point, I think we are where we intend to be: ATN and CLK are being asserted by the IEC controller, and the DATA line is not being held by anyone: The 1541 will now be expecting the transmission of the first bit within 255 usec. If it takes longer, then it would be an EOI condition. So let's see what happens next...
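The bus state after step 8 can be double-checked with a small open-collector model, where a line reads 0V if any device is pulling it low and 5V otherwise. This is only a sketch: the class and device names are made up for illustration, and the step numbers in the comments refer to the list above.

```python
class OpenCollectorLine:
    """One IEC line: reads 0 (0V) if any device pulls it low, else 1 (5V)."""

    def __init__(self, name):
        self.name = name
        self.pulling = set()     # devices currently pulling the line low

    def pull_low(self, device):
        self.pulling.add(device)

    def release(self, device):
        self.pulling.discard(device)

    @property
    def level(self):
        return 0 if self.pulling else 1


def bus_state_after_step_8():
    """Replay the steps above and return the (ATN, CLK, DATA) levels."""
    atn, clk, data = (OpenCollectorLine(n) for n in ("ATN", "CLK", "DATA"))
    atn.pull_low("controller")    # step 1: ATN=0
    clk.pull_low("controller")    # step 1: CLK=0
    data.pull_low("1541")         # step 4: drive acknowledges ATN
    clk.release("controller")     # controller signals ready to send
    data.release("1541")          # step 7: drive signals ready to receive
    clk.pull_low("controller")    # step 8: clock-low half of the first bit
    return atn.level, clk.level, data.level
```

Calling `bus_state_after_step_8()` gives `(0, 0, 1)`: ATN and CLK low, DATA floating high, which matches the state described above.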

The EOI detection is done by checking the VIA timeout flag in $180D bit 6, which if detected, will cause execution to go to $E9F2 -- which is exactly what we see happen at instruction 3760.

It looks like the VIA timer is not ticking at 1MHz, but rather, is ticking down at 40.5MHz. Fixing that gets us further: This test now passes. What about the remaining tests?
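The effect of the wrong tick rate is easy to quantify. The sketch below assumes a timeout of 255 timer ticks (taken from the 255 usec figure above) and shows one possible way to derive a 1MHz enable from a 40.5MHz clock with a phase accumulator. The post does not say how the timer was actually fixed, so the divider here is purely an assumption.

```python
def timeout_us(ticks, tick_rate_mhz):
    """Time in microseconds for a timer to count `ticks` at the given rate."""
    return ticks / tick_rate_mhz


def one_mhz_enable(num_cycles, num=2, den=81):
    """Return one flag per 40.5MHz cycle; True means 'tick the 1MHz timer'.

    40.5MHz is 81/2 times 1MHz, so adding 2 per cycle to an accumulator
    and ticking each time it reaches 81 gives exactly 2 ticks per 81 cycles.
    """
    acc = 0
    ticks = []
    for _ in range(num_cycles):
        acc += num
        if acc >= den:
            acc -= den
            ticks.append(True)
        else:
            ticks.append(False)
    return ticks


if __name__ == "__main__":
    print(timeout_us(255, 1.0))          # intended timeout at 1MHz
    print(timeout_us(255, 40.5))         # what the drive saw at 40.5MHz
    print(sum(one_mhz_enable(81_000)))   # ticks produced in 81,000 cycles
```

At 40.5MHz the 255-tick window shrinks from 255 usec to roughly 6.3 usec, so the drive declares EOI long before the controller can clock out the first bit.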

==== Summary ======================================================================================================

pass lib.tb_iec_serial.Simulated 1541 runs (23.9 seconds)

pass lib.tb_iec_serial.ATN Sequence with no device gets DEVICE NOT PRESENT (3.9 seconds)

pass lib.tb_iec_serial.Debug RAM can be read (4.1 seconds)

pass lib.tb_iec_serial.ATN Sequence with dummy device succeeds (7.2 seconds)

pass lib.tb_iec_serial.ATN Sequence with VHDL 1541 device succeeds (22.8 seconds)

fail lib.tb_iec_serial.Read from Error Channel (15) of VHDL 1541 device succeeds (29.1 seconds)

fail lib.tb_iec_serial.Write to and read from Command Channel (15) of VHDL 1541 device succeeds (150.6 seconds)

===================================================================================================================

pass 5 of 7

fail 2 of 7

===================================================================================================================

The ATN sequence test with the VHDL 1541 device is the one we just got working again. So that's good. Unfortunately, as we see, the remaining two still fail. That's ok. We'll take a look at the output, and see where they get to, and where they break. I've dug up a program I made earlier, but had forgotten about, that scans the test output and gives me a summarised, annotated version of it. Let's see what that has to say about the first of the two failing tests:

It looks like it sends the first byte under ATN to the 1541, but then gets stuck in the turn-around from talker to listener. It gets to state 203 in the IEC controller, where it is waiting for the CLK line to go low, to indicate that the 1541 has accepted the request.

Compared to when I had this working in the previous post, before refactoring a pile of stuff, the main difference I can see is that I changed the delays in this routine, to leave more time after releasing ATN before messing with the other lines. Reverting this got the test passing again. Did it also fix the last test?

And, yes, it did :)

==== Summary ======================================================================================================

pass lib.tb_iec_serial.Simulated 1541 runs (27.9 seconds)

pass lib.tb_iec_serial.ATN Sequence with no device gets DEVICE NOT PRESENT (5.0 seconds)

pass lib.tb_iec_serial.Debug RAM can be read (3.7 seconds)

pass lib.tb_iec_serial.ATN Sequence with dummy device succeeds (6.8 seconds)

pass lib.tb_iec_serial.ATN Sequence with VHDL 1541 device succeeds (19.9 seconds)

pass lib.tb_iec_serial.Read from Error Channel (15) of VHDL 1541 device succeeds (52.2 seconds)

pass lib.tb_iec_serial.Write to and read from Command Channel (15) of VHDL 1541 device succeeds (232.8 seconds)

===================================================================================================================

Okay, so let's synthesise this into a bitstream, and try it out on real hardware.

It works partially: The device detection and request to turn around from talker to listener seems to succeed, but then the attempt to read from the command channel status fails.

I suspect that the sensitivity to timing in the turn-around code in the controller is revealing that I have something a bit messed up, and that the delays from driving it at low speed from BASIC are causing things to go wrong.

So I think it might be worth increasing those timers again so that the turn-around test fails, and then examining why this happens, as it should still work with those longer timeouts. Once I understand that better, and quite likely find and fix some subtle bug with the protocol, we should be in a better position with this.

Ah -- found the first problem: After sending a byte under ATN, it was releasing both ATN, and also CLK, which allows the 1541 to immediately start the count-down for detecting an EOI event. This explains what I saw with the real hardware test. I could sharpen the tests involving turn-around by adding a delay of 1ms after sending a byte under ATN, so that it would trip up.
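A back-of-the-envelope check shows why this only bites when the host is slow. The sketch below uses the 255 usec EOI window mentioned earlier and ignores the drive's own reaction time; the function name and the example timings are hypothetical.

```python
EOI_TIMEOUT_US = 255  # EOI window quoted earlier in the post


def drive_sees_eoi(clk_release_us, next_clk_low_us, timeout_us=EOI_TIMEOUT_US):
    """True if CLK stays released for longer than the drive's EOI window.

    Simplification: the drive is assumed to start its countdown the moment
    CLK is released.
    """
    return (next_clk_low_us - clk_release_us) > timeout_us


# Buggy behaviour: CLK released together with ATN at t=0, and a slow host
# (for example BASIC) only issues the next command 1000 usec later.
buggy = drive_sees_eoi(clk_release_us=0, next_clk_low_us=1000)

# Fixed behaviour: CLK is held low until the controller is actually ready,
# then released shortly before the next clock edge.
fixed = drive_sees_eoi(clk_release_us=980, next_clk_low_us=1000)
```

Here `buggy` is `True` and `fixed` is `False`: a test that issues commands back to back never leaves the gap open long enough to trip the countdown, which is why adding a deliberate delay sharpens the test.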

Anyway, fixing that doesn't cause any of the existing tests to fail. However, my test to talk to a real 1541 from BASIC using the new IEC controller still fails when trying to read the DOS status message back. I'm still assuming that the problem is with handling the turn-around from talker to listener, so I think I'll have to add that extra test with a delay, in the hope that the simulated 1541 gets confused like the real drive does.

I added a test for this, but it passes in simulation, while the real drive still fails. That's annoying. I am now writing a little test program in assembly to run from C64 mode with the CPU at full speed, to try to talk to the 1541. In the process of doing that, I realised that the IEC controller does not release the IEC lines on soft-reset, which makes testing a pain, as the C65-mode ROM has a function to auto-boot from an IEC device, which is triggered by one of the IEC lines being low. So I'm fixing that, while I work on this little test program.

While that is synthesising, I've been playing around more with my test program, and it is getting stuck waiting for the IEC ready flag to get asserted. With that clue, I have fixed a couple of bugs where the busy flag wasn't clearing, which might in fact be the cause of the errors we are seeing. That is, it might be communicating with the 1541 correctly, but not reporting back to the processor that it is ready for the next IEC command.

It is weird that this is not showing up in the simulation tests.

With a bit more digging, on real hardware, the busy flag bug only seems to happen with the IEC turn-around command ($35). More oddly, it doesn't seem to happen immediately: Rather, the busy flag seems to be cleared initially, and only after a while, does it get set.

Well, now I have encountered something really weird: Issuing a command from C64 mode to the IEC controller, even via the serial monitor interface results in the IEC controller busy flag being stuck. But if the machine is in C65 mode, then this doesn't happen. For example, if the MEGA65 is sitting at the BASIC65 ready prompt, I can do the following in the serial monitor:

.mffd3697

:0FFD3697:20000080000020000F80051000000FF

.sffd3699 48

.sffd3698 30

.mffd3697

:0FFD3697:200000980000020000F80051000000FF

The first byte of each dump, at $D697, is the register that contains the busy/ready flag. A value of $20 = ready, while $00 = busy.

Now if I issue exactly the same from C64 mode, i.e., just after typing GO64 into BASIC65:

.mffd3697

:0FFD3697:202000980000020000F80051000000FF

.sffd3699 48

.sffd3698 30

.mffd3697

:0FFD3697:002000080000020000F80051000000FF

We can see that this time, it stays stuck in the busy state. Curiously bit 5 of $D698 seems to get magically set in C64 mode. That's the SRQ sense line. This would seem to suggest that the SRQ line is stuck low. That's driven by one of the CIAs on the C65, so it shouldn't matter. But clearly it is messing something up.
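For anyone wanting to check dumps like these without counting hex digits by eye, a small decoder helps. This is a sketch that assumes the monitor output format seen above (a colon, the 28-bit address, a colon, then 16 bytes of hex) and uses only the two bits discussed in this post; the function and constant names are invented for illustration.

```python
READY_BIT = 0x20       # bit 5 of $D697: $20 = ready, $00 = busy (per the post)
SRQ_SENSE_BIT = 0x20   # bit 5 of $D698: the SRQ sense bit (per the post)


def parse_monitor_line(line):
    """Parse ':0FFD3697:<hex bytes>' into (address, bytes)."""
    _, addr_hex, data_hex = line.strip().split(":")
    return int(addr_hex, 16), bytes.fromhex(data_hex)


def decode_status(line):
    """Decode the ready flag and SRQ sense bit from a dump starting at $D697."""
    _, data = parse_monitor_line(line)
    return {
        "ready": bool(data[0] & READY_BIT),                  # byte 0 = $D697
        "srq_sense_bit_set": bool(data[1] & SRQ_SENSE_BIT),  # byte 1 = $D698
    }


c65_after = decode_status(":0FFD3697:200000980000020000F80051000000FF")
c64_before = decode_status(":0FFD3697:202000980000020000F80051000000FF")
c64_after = decode_status(":0FFD3697:002000080000020000F80051000000FF")
```

Decoding the three complete dumps gives ready with the SRQ bit clear in C65 mode, ready with the SRQ bit set before the command in C64 mode, and busy with the SRQ bit set afterwards.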

Time to add a simulation based test, where I set the SRQ line in this way, and see what happens, i.e., whether I can reproduce the error or not.

And it does! Yay! It turns out my C128 fast serial protocol detection was a bit over-zealous: If SRQ is low, it assumes fast protocol, instead of looking for a negative going SRQ edge. Fixing that gets the test to pass. So synthesising a fresh build including this.
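The difference between the two detection rules is easiest to see on sampled SRQ values. The sketch below is a behavioural illustration in Python, not the controller's VHDL, and both function names are hypothetical.

```python
def fast_protocol_by_level(srq_samples):
    """Over-zealous rule: any sample with SRQ low counts as fast protocol."""
    return any(s == 0 for s in srq_samples)


def fast_protocol_by_falling_edge(srq_samples):
    """Stricter rule: require a 1 -> 0 transition between adjacent samples."""
    return any(
        prev == 1 and cur == 0
        for prev, cur in zip(srq_samples, srq_samples[1:])
    )


stuck_low = [0] * 8            # SRQ held low the whole time, as in C64 mode
real_pulse = [1, 1, 0, 0, 1]   # a drive actually pulsing SRQ
idle_high = [1] * 8            # nothing happening on SRQ
```

With SRQ stuck low, the level rule reports fast protocol while the edge rule does not; a genuine pulse satisfies both, and an idle-high line satisfies neither.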

And it works!

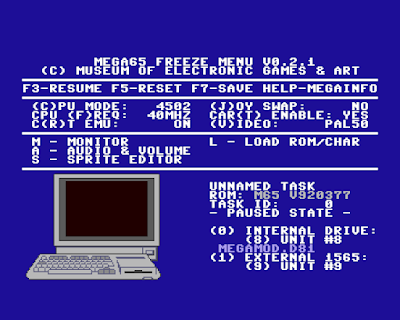

With my test program slightly modified, like this:

we get a result like this:

Yay! Finally it is working for normal IEC serial protocol. I'm going to call this post here, because it's already way too long, and continue work on JiffyDOS and the C128 fast serial protocols in a follow-on post.