In the last episode, we got the composite video output of the MEGA65's expansion board working -- but only in monochrome. Through trickery, we were getting more than 16 shades of grey out of our 4-bit DAC (and we have further Cunning Plans :tm: to extract even more, but that's likely to be described in another blog post). But what we really want right now, is working colour. For both NTSC and PAL.

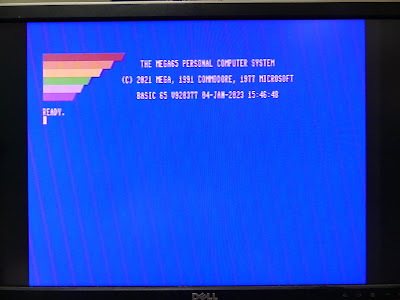

In this post, I manage to get PAL non-interlaced composite video working with pretty decent colour signalling, producing images like this:

First step, get those colour bursts in the raster lines where they need to go, and only in the raster lines where they should be, and with the correct phase. The book Video Demystified continues to be my able assistant here, with lots of deep technical information. The book can be easily found and purchased via online search.

The colour burst pulse should be 9 +/- 1 cycle at the appropriate frequency. I set up the mechanics for generating that in the previous blog post, but didn't actually hook it up, so I'll do that now, and see if we can't get that sorted fairly quickly. Interestingly, according to this image, the C64 had a much longer colour burst, presumably because it can only help the receiving monitor to have more cycles to lock onto the phase of. It also displayed an invisible white pixel on the left edge, presumably to help monitors calibrate the luminance more stably.

raster15khz_skip is conveniently counting down the 108 pixel ticks from the start of the HSYNC pulse. That covers a total of 12 usec, so we can just check for about the half-way point, and then add the colour burst signal.

We have to scale the colour burst down to a range of +/- 37 instead of +/- 127. I'm willing to bet that a simple divide by 4 to make it +/- 32 will work fine. We also need to synchronise the colour burst to start at $00 or $80 hexadegrees (see my previous post for a bit more discussion on the hexadegree system of 256 = $100 hexadegrees in a circle).
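
To make the hexadegree convention and the divide-by-4 scaling a bit more concrete, here is a minimal Python sketch. The table layout and the names `SINE_TABLE`, `hexadegrees_to_degrees` and `burst_sample` are assumptions made for illustration; this is not the MEGA65 VHDL.

```python
import math

# Hypothetical 256-entry signed sine table: one entry per hexadegree, range +/-127.
SINE_TABLE = [round(127 * math.sin(2 * math.pi * h / 256)) for h in range(256)]

def hexadegrees_to_degrees(h):
    """Convert an angle in hexadegrees (256 per circle) to degrees."""
    return (h % 256) * 360 / 256

def burst_sample(phase):
    """Colour burst sample at the given phase, scaled to roughly +/-32.

    The arithmetic shift right by 2 stands in for the 'simple divide by 4'.
    """
    return SINE_TABLE[phase % 256] >> 2

print(hexadegrees_to_degrees(0x80))             # 180.0
print(burst_sample(0x00), burst_sample(0x40))   # 0 31
```

Starting the burst at $00 or $80 means the first sample sits on the centre line, which is the property the phase clamp is meant to guarantee.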

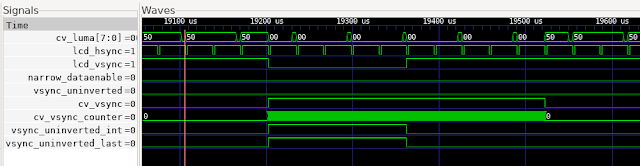

Okay, I have added logic to clamp the phase at the start of the colour burst, and then to selectively super-impose it onto the luma signal after the sync pulse. It looks something like this:

The wiggly lines are the colour burst. What is important, is that it starts from the centre line, rather than some random point on every raster line. This is right, so that's good. I reckon I can start the burst earlier, and have more cycles, like the C64 does. I can also slightly tweak the end time, so that it always ends on a zero-crossing, rather than having a glitch. Both of those are now done.

So in theory if we now modulate the colour components onto the colour burst signal, we should see colours. And this is where it gets fun. We will focus on PAL first. The luma signal is actually already a combination of the red, green and blue channels. This is called Y. Then to get all three, we need to have U and V, which are other combinations of the red, green and blue. This structure is based on the historical development of colour TV as an overlay onto the existing black and white TV signals, combined with some clever insight into the sensitivity of human vision to different colours.

What matters for us right now, is the equations for U and V. These should be gamma corrected first, but I'm going to leave that for now. It just means that the colour saturation curves will be a bit wrong. Gamma correction can be added in later, essentially just using a 256 entry look-up table that has the gamma corrected values, and which is pre-applied to the red, green and blue channels.

But back to those equations:

Y = 0.299R + 0.587G + 0.114B

U = – 0.147R – 0.289G + 0.436B = 0.492 (B – Y)

V = 0.615R – 0.515G – 0.100B = 0.877(R – Y)

Since Y is already transmitted as luma, which is mostly the green channel, with a bit of red and a bit of blue, the U and V signals are the differences between the red and blue channels and Y. Note that this means that U and/or V can be negative at any point in time.
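
As a quick sanity check of those equations, here is a small Python sketch that applies them to a few primary colours. It skips gamma correction, and the function name `rgb_to_yuv` is just a name I picked for the example.

```python
def rgb_to_yuv(r, g, b):
    """Convert RGB components (each 0.0 to 1.0) to Y, U, V using the equations above."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v

for name, rgb in [("white", (1, 1, 1)), ("red", (1, 0, 0)), ("blue", (0, 0, 1))]:
    y, u, v = rgb_to_yuv(*rgb)
    print(f"{name:5s} Y={y:+.3f} U={u:+.3f} V={v:+.3f}")
```

White comes out with U and V at (effectively) zero, while red gives a negative U and blue a negative V, which is the "can be negative" point made above.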

The U and V signals are then modulated with the colour burst signal: U with the sine of the current colour burst phase, and V with the cosine of the colour burst phase. This means that they are encoded "90 degrees apart", using what is called "I/Q" or "quadrature" coding. There is a lot of clever-pants signal processing that can be done with I/Q signals to extract the I and the Q parts back out at the other end. Fortunately we don't have to separate them, but just combine them, which we just do with simple addition.
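
The modulation step can be sketched in a few lines of Python. This uses floating point and `math.sin`/`math.cos` rather than a lookup table, and the function name `chroma_sample` is an assumption for the example only.

```python
import math

def chroma_sample(u, v, phase_hexadegrees):
    """U rides on the sine and V on the cosine of the subcarrier phase; the two are summed."""
    angle = 2 * math.pi * (phase_hexadegrees % 256) / 256
    return u * math.sin(angle) + v * math.cos(angle)

# At phase $00 only V contributes; a quarter turn later ($40) only U does.
print(chroma_sample(0.3, 0.5, 0x00))
print(chroma_sample(0.3, 0.5, 0x40))
```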

The literature indicates that the modulated U and V signals should then be "saturation limited", so that if the magnitude of a value is too great, it gets clipped rather than wrapped. This makes sense, as it just limits the red and blue aspects of the signals.
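
The difference between clipping and wrapping is easy to show in Python. This is only a sketch: the limit of 127 and the 8-bit width are assumptions for the example, and `saturate` and `wrap_signed` are hypothetical helper names.

```python
def saturate(value, limit):
    """Clip value into [-limit, +limit] instead of letting it wrap."""
    return max(-limit, min(limit, value))

def wrap_signed(value, bits=8):
    """What an overflowing signed register of the given width would end up holding."""
    half = 1 << (bits - 1)
    return ((value + half) % (1 << bits)) - half

print(saturate(150, 127), wrap_signed(150))  # 127 -106
```

A clipped value is merely a bit less saturated than intended, whereas a wrapped value flips sign entirely, which would show up as a completely wrong hue.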

We will need a bit of a pipeline to generate U and V, scale them, and then modulate and saturation limit them. The Y signal will need to be similarly delayed so that the components all stay in phase.

Ok, I now have the colour signal super-imposed over the luma signal. However, I think the amplitude is too large, so I'll try cutting it in half. This is what I am seeing:

The checkered pattern is the direct result of the over-amplitude chroma signal, as far as I can tell. What is interesting, and I don't really know why it is happening, is how the parts of the display that are being displayed more or less correctly seem to be getting dithered.

Now, the quadrants of the image that are being displayed properly are those that have blue on full, and more red than green. Not yet sure what clues that gives me. Let's look at the chroma amplitude first, anyway.

This is how it looks at the moment, when we look at the raw signal:

We can see that the amplitude is much greater than in the colour burst itself. That should be fairly easy to fix.

The bandwidth of the chroma signal is also way too high, as evidenced by the very jagged nature of the signal. This is a problem, because the chroma signal is only allowed to occupy ~1MHz of bandwidth. I'll likely need some kind of low-pass filter to solve this problem. I'll have a think about that while I fix the amplitude.

That's looking a lot more sensible to me. Let's hope it fixes it. Even if the bandwidth is bad now, the vertical colour bars are wide enough that they show up as areas with constant phase, and thus very narrow bandwidth, and they really should show up in colour. So I'll synthesise that, while I think more about limiting the chroma bandwidth more generally.

One of the things that has confused me about the chroma signal for PAL and NTSC, is what the actual horizontal colour resolution really is. As the carrier is ~4MHz, it can't be higher than that. In fact, it probably has to be somewhat less than that, probably of the order of 2MHz or so. Compared to our ~13.5MHz clock, that's close to one character wide.

In fact, if we remember back to the C64's composite output, that's probably close to right: Trying to display, for example, a red character on a blue background resulted in unreadable rubbish, because at the ~8MHz pixel clock of the C64, it couldn't change between red and blue every pixel, because the result would be above the colour carrier. Whole characters could be alternately coloured, however, without much trouble. So it's probably about right. It will be interesting to see if we get that kind of problem with the corrected colour amplitude, or if the monitor still refuses to show it in colour.

Still no colour, but the stippled pattern is less pronounced. My best guess at the moment is that it is Chroma Dots caused by the colour signal not being decoded, because the TV is treating it as a monochrome signal still.

Ah, there is a good clue in here. Apparently you _don't_ reset the phase of the colour carrier every raster, but leave it running, exactly as elpuri describes "despite what all those nice diagrams always show". And that has certainly helped! The monitor now clearly thinks that it is dealing with a colour image, even if things are rather broken:

The first image is of the PAL display:

With NTSC it looks like I am encoding red as green. The upper right triangles of the middle section are actually not far off the correct blue gradient that is expected here.

Thinking about it, this test pattern has really quite low chroma bandwidth requirements, because the colours largely transition gradually. So I should be able to get it working here, without having to fluff about with low-pass filtering the chroma signal. That can be dealt with later.

I'm tempted to remove one of the U or V channels temporarily to simplify the debugging. It would also be great at this point to be able to access a "correct" composite display of the test pattern, so that I could compare it. I was hoping my VP-728 upscaler might have composite outputs, but it doesn't.

So I'll start by synthesising with just U, and no V component. I might also just try outputting the RGB as YUV values directly, since that should generate a valid image, just with messed up colour space. If that still has non-coloured sections, then I will know that there is something else messed up.

Okay, so with either U or V disabled, I am indeed getting less colouring.

Hmmm.. I've reworked the sine table lookup stuff a couple of times, and I'm now more happy that that is doing what it should. PAL colours are still messed up, and missing from some areas, especially where red dominates the colour. NTSC colour encoding is still quite incomplete, as I am basically using PAL encoding in all modes right now. But it's progress.

Now it's time to look at all those PAL phase changes, and check how I am going with that. I know that I am not inverting the phases each field, which is causing the colours to switch between two different rotations of the colour wheel. So that's the first one to fix, I think.

The first thing to tackle is that the phase of the V component is supposed to alternate every other line. With that in place, it's "less bad", but not perfect, as I still haven't implemented the inter-frame correction.

There are also still large areas that are lacking colour. Investigating this, I have found that green coloured areas are resulting in rubbish colour information. A single pure colour should generate a similarly pure sine wave with the correct phase that indicates its hue. But instead, I'm seeing choppy rubbish like this:

The nice sine wave is the colour burst at the start of the raster line. Then the choppy stuff is all supposed to be pure green (RGB = #00FF00). Pure red doesn't have this problem.

Now, one of the interesting things with the quadrature encoding method that PAL and NTSC use, is that a mixture of a pair of sine and cosine waves with the same frequency and kept in phase, will generate a signal of variable amplitude and phase, but at the same frequency. Thus for a region with constant colour, regardless of the colour, we should see a nice continuous sine wave. Not this kind of choppy rubbish.
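
That property is just the usual identity u·sin(θ) + v·cos(θ) = A·sin(θ + φ), with A = sqrt(u² + v²) and φ = atan2(v, u). Here is a small Python check of it for pure green, using the U and V coefficients for G from the equations above (U = -0.289, V = -0.515). The function names are mine, and gamma and PAL line switching are ignored.

```python
import math

def chroma(u, v, theta):
    """Quadrature sum of U on the sine and V on the cosine at angle theta (radians)."""
    return u * math.sin(theta) + v * math.cos(theta)

def amplitude_and_phase(u, v):
    """Amplitude and phase (degrees) of the single sine wave the sum collapses into."""
    return math.hypot(u, v), math.degrees(math.atan2(v, u)) % 360

u, v = -0.289, -0.515          # pure green, full scale
amp, phase = amplitude_and_phase(u, v)

# Every sample around the circle lies on one clean sine wave of fixed amplitude.
for step in range(256):
    theta = 2 * math.pi * step / 256
    expected = amp * math.sin(theta + math.radians(phase))
    assert abs(chroma(u, v, theta) - expected) < 1e-9

print(f"amplitude={amp:.3f} phase={phase:.1f} degrees")
```

So for a constant colour, anything other than a smooth sine wave on the scope points to a bug somewhere in the arithmetic rather than in the encoding scheme.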

Disabling either U or V colour information resolves it -- not surprising since it will result in either a pure sine or pure cosine component from the remaining signal.

Ah! I found the problem: When combining the U and V signals after multiplying them by the sine and cosine table entries, it is possible for the result to overflow the variable I had defined. By adding an extra bit of precision, that problem has now gone away. I'm not sure that the resulting amplitude is now high enough, but that is something fairly easy to fix later, if the colours are undersaturated (which is how that problem would manifest).
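
To illustrate why one extra bit is enough, here is a tiny Python sketch. The term values are made up for the example, and `fits_signed` is a hypothetical helper, not something from the VHDL.

```python
def fits_signed(value, bits):
    """True if value is representable as a two's complement number of the given width."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return lo <= value <= hi

# Assumed worst-ish case: both modulated terms are large and have the same sign.
u_term, v_term = 90, 90
total = u_term + v_term

print(fits_signed(u_term, 8), fits_signed(v_term, 8))  # True True
print(fits_signed(total, 8), fits_signed(total, 9))    # False True
```

Each term fits in 8 bits on its own, but the sum of two 8-bit signed values can need 9 bits.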

I'm synthesising a test bitstream for this now, and am quietly hopeful that it will fix a lot of the rubbish I was seeing, because a lot of it can be explained by pseudo-random patterns moving from the colour space to luma space -- which is exactly what would happen if it was saturating and then wrapping around instead of clipping.

And indeed that has helped: There are now colours that are still wrong, but there is no longer spotty rubbish caused by random looking colour subcarrier wave-forms. Here is how the PAL test pattern looks now:

Now I think the most fruitful thing to attack will be the correct handling of the phase inversion that is causing the colour to still flicker between two different hues all the time. This unfortunately can't be seen in the photo, because of the short shutter time. Figures 8.16a and 8.16b in Video Demystified have the information we need here, so I'll progressively implement it.

I'll start with fake-progressive, as that has by far the simplest arrangement.

In the process I found I was setting the PAL colour burst phase using degrees instead of hexadegrees, which meant that it was using funny angles: 135 hexadegrees = 190 degrees and 225 hexadegrees = 316 degrees, giving an angle between them of 126 degrees, instead of 90 degrees, and likely to be causing all sorts of problems. Let's see if that improves things. Not noticeably, except that it removes the need for some fudge factors I had previously added, so that's a bonus. I also noticed I have -V on the wrong half of raster lines, so fixing that, too.
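
The degrees versus hexadegrees mix-up is easy to reproduce in Python. The helper names here are made up for the example.

```python
def hexadegrees_to_degrees(h):
    """256 hexadegrees make a full circle."""
    return h * 360 / 256

def degrees_to_hexadegrees(d):
    """Nearest hexadegree value for an angle given in degrees."""
    return round(d * 256 / 360)

# What I was doing: feeding 135 and 225 straight in as if they were hexadegrees.
wrong = [hexadegrees_to_degrees(135), hexadegrees_to_degrees(225)]
print(wrong, wrong[1] - wrong[0])   # [189.84375, 316.40625] 126.5625

# What was intended: 135 and 225 degrees, converted to hexadegrees first.
right = [degrees_to_hexadegrees(135), degrees_to_hexadegrees(225)]
print([hex(h) for h in right], hexadegrees_to_degrees(right[1] - right[0]))  # ['0x60', '0xa0'] 90.0
```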

But now back to getting that sequence of suppressed colour bursts and phase inversions at the start of each field.

Hunting around the internet for tools to make the debugging of the video more efficient, I found cvbs-decode, which can decode raw PAL/NTSC video (but I can't get it to run, due to some weird python errors), and also the HackTV PAL/NTSC video generator for HackRF written in C.

The HackTV stuff is essentially an implementation of what I want to do, just in another programming language. Thus it could be a good place to mine for clues on what I am doing wrong. The code is structured very abstractly, however, so that might take me a while.

Coming back around to the parts of the test pattern that lack any colour at all, I am looking at the waveforms I am producing for those, and I can actually see that there is in fact no colour subcarrier visible for that part of the image. That's at least one thing that I can tackle with the tools I have already created, so I'll bash against that for a while.

The main area that is showing no colour is the triangle that has more red than green, and has blue at full value. If there is more green than red, then it seems to be ok. Looking closer, there is a colour signal, but the amplitude is really low. I had to reduce the colour amplitude by 2x while implementing it, to prevent over-flows. It's possible that I have over-attenuated it.

Meanwhile, to try to improve my workflow, I have improved my little trace program so that as well as a PDF with oscilloscope like traces, it now also produces an HSYNC-synchronised PNG view, effectively showing the whole video frame, including SYNC pulses (as black). The PAL colour information will appear as dot/stripe patterns, as it would on a black-and-white TV. After fixing a few bugs that it helped me to find, this is what I see for the PAL interlaced video:

You will probably need to click on the image to view full-size, as it has a fairly horrible aspect ratio.

One nice thing about showing the colour information directly, without decoding it, is that we can judge the colour intensity by eye from how bright the pattern is. Here is a zoom-in of part of it:

Here we can see two regions with quite high colour saturation, and between them in the lower-right area, a section that still has some colour information, but the pattern is quite faint, meaning that the colour intensity is very low. This is the area that should be purple. This clearly means that we have some problem with the YUV generation, and explains the lack of colour on the PAL monitor, because it really isn't there, or rather, is so faint, as to be effectively invisible.

One of the colours that is having this problem is #5800FF. Let's do the YUV calculation by hand for this colour, and see how we think it should show up:

Y = 0.212R´ + 0.700G´ + 0.086B´ = 0.073 + 0 + 0.086 = 0.159

U = 0.492(B´ – Y) = 0.492 x 0.841 = 0.414

V = 0.877(R´ – Y) = 0.877 x (0.345 - 0.159) = 0.163

Scale these up to 8-bit range, and we get:

Y = 41 = $29

U = 106 = $6A

V = 42 = $2A

Hmm... The values I have in the VHDL are nothing like that. Y itself is quite different, for a start. Now, there are two ways to calculate U and V: The method above, or by applying direct RGB coefficients:

Y = 0.299R´ + 0.587G´ + 0.114B´

U = – 0.147R´ – 0.289G´ + 0.436B´ = 0.492 (B´ – Y)

V = 0.615R´ – 0.515G´ – 0.100B´ = 0.877(R´ – Y)

Hmm, these coefficients for Y are different again from the ones I used above, which I got from a different part of the document. They do seem to match what I am using in the VHDL, though, so let's start by checking those.

Y= 0.103 + 0 + 0.114 = 0.217

U = -0.051 - 0 + 0.436 = 0.385

V = 0.212 - 0 - 0.1 = 0.112

Scaled up, we get:

Y = 55 = $37

U = 98 = $62

V = 54 = $36

The VHDL is producing:

Y = $1D

U = $10

V = $04

In short, I have something very wrong going on with my YUV calculations in the VHDL, because the ratios between these values are not correct. It's a little more complicated to debug here, because the VHDL doesn't do x256 scaling, because it reserves part of the 8-bit range of the DAC for SYNC and head-room for colour coding. Thus I calculate Y with a different scaling factor compared with U and V. That said, U and V are calculated with the same scale factor, so the ratio between those two should hold. So let's look more closely at those:

U = – 0.147R´ – 0.289G´ + 0.436B´ = 0.492 (B´ – Y)

V = 0.615R´ – 0.515G´ – 0.100B´ = 0.877(R´ – Y)

For U, the VHDL does: U = -6 R - 12 G + 18 B. Those ratios look ok.

For V, the VHDL does: V = 18R - 15G - 3B. Again, those ratios look ok.
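
The "ratios look ok" check can be done mechanically: scale each set of floating point coefficients so that its largest one becomes 18, and round. This Python sketch only checks the ratios within each equation; the function name and the choice of 18 as the target are taken from the integer coefficients quoted above, not from any other knowledge of the VHDL.

```python
U_FLOAT = (-0.147, -0.289, 0.436)   # R, G, B coefficients for U
V_FLOAT = (0.615, -0.515, -0.100)   # R, G, B coefficients for V

def scale_to_integers(coeffs, largest=18):
    """Rescale so the biggest coefficient maps to `largest`, then round to integers."""
    peak = max(abs(c) for c in coeffs)
    return [round(c * largest / peak) for c in coeffs]

print(scale_to_integers(U_FLOAT))   # [-6, -12, 18]
print(scale_to_integers(V_FLOAT))   # [18, -15, -3]
```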

So where have I messed up? Let me double-check the direct calculations:

U = – 0.147R´ – 0.289G´ + 0.436B´

U = -0.147x88 - 0.289x0 + 0.436 x 255

U = -12.936 - 0 + 111

U = 98

So U looks ok. Now to V:

V = 0.615R´ – 0.515G´ – 0.100B´

V = 54 - 0 - 26 = 28

Ah, so I made an error in my calculations above: the scaled value V = 54 = $36 is only the red term, with the blue term not yet subtracted. The correct value is 28 = $1C.

So in fact the ratio of U and V is about right.
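
For anyone wanting to repeat the hand calculation, here it is as a few lines of Python, working directly on the 8-bit values of #5800FF with the direct RGB coefficients. Gamma is ignored, as above, and `yuv_8bit` is just a name for the example.

```python
def yuv_8bit(r, g, b):
    """Y, U, V from 8-bit R, G, B using the direct coefficients, rounded at the end."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.147 * r - 0.289 * g + 0.436 * b
    v = 0.615 * r - 0.515 * g - 0.100 * b
    return round(y), round(u), round(v)

print(yuv_8bit(0x58, 0x00, 0xFF))   # (55, 98, 29)
```

V comes out as 29 rather than 28 here only because the intermediate terms are not rounded before subtracting.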

This makes me then suspect that the colour saturation is too low, i.e., that we need to scale up the chroma signal. But it already has quite a bit of amplitude for some of the other colours. So maybe I should implement the gamma correction first, instead.

PAL should use a gamma correction of 2.8. There are plenty of online gamma curve calculators that can be used to generate a suitable table. Although the NTSC/PAL preferred curve is a little more complex:

For R, G, B < 0.018

R´ = 4.5 R

G´ = 4.5 G

B´ = 4.5 B

For R, G, B ≥ 0.018

R´ = 1.099 R^0.45 – 0.099

G´ = 1.099 G^0.45 – 0.099

B´ = 1.099 B^0.45 – 0.099
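
Turning that curve into a 256-entry look-up table is straightforward. Here is a Python sketch of how such a table could be generated; the 8-bit in, 8-bit out format and the name `GAMMA_LUT` are assumptions, and this is not necessarily how the table in the VHDL was produced.

```python
def gamma_correct(x):
    """Piecewise gamma curve from above, for a linear value x in the range 0.0 to 1.0."""
    if x < 0.018:
        return 4.5 * x
    return 1.099 * x ** 0.45 - 0.099

# One entry per 8-bit input level; the same table serves R, G and B.
GAMMA_LUT = [round(255 * gamma_correct(i / 255)) for i in range(256)]

print(GAMMA_LUT[0], GAMMA_LUT[255])   # 0 255
```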

Gamma is now implemented. I'll synthesise a bitstream to see how it looks. But what I can already tell is that it hasn't fixed the lack of saturation of the purpley areas in the simulation. So there is still another problem. But it's nice to have at least one issue eliminated.

Looking at the specifications for NTSC and PAL video again, I think I have the colour burst amplitude too high, which causes the monitor to interpret the colour saturation as being too low. Currently it has a peak to trough amplitude of 64 = $40 via 3 bit shifts of the sine table. But it is supposed to only rise or fall by 1/2 of sync voltage. With our scaling, that means it should be +/- $18, for a range of $30. So it will be contributing to the problem, but is probably not enough to be the root cause. I'll fix it, anyway.

Meanwhile, looking at the images on the monitor instead of simulation again, the colour alternation flicker is annoying me a lot. So I am going to try a fix for that: I suspect that the phase alternation polarity should be switched every field -- although I cannot yet find clear information on this.

I've also introduced some sort of regression into the NTSC image, which I need to deal with. Ah, found that. Also affects PAL when interlace is enabled. I was a bit over-zealous in marking the active vs non active area, and was marking some of the active area as inactive, causing it to blank.

Resynthesising again... That's fixed the regression.

I think my next step is to track down the lack of chroma intensity when R > G, and B = full. I'll attack this by making a simple program in C that does the same YUV conversion, to see if I haven't just messed something up in the VHDL calculation.

Here is my hand-calculated Y, U and V panels for this pattern:

The V panel is showing much the same problem -- so this is good -- it means I have misunderstood something about the process of calculating these values. I'm guessing I'm not handling negative values properly.

U and V have ranges centred around zero, so this kind of sharp transition can appear. By correcting this in the C program, I am able to get much saner looking panels:

Much better, with no nasty lines going through. Also, it makes sense the way that U and V have peaks in particular corners, as U is basically the "missing blue" and V the "missing red". As the pattern I am rendering here has increasing red as we go down the image, it makes sense that the missing blue component will decrease a bit (U, in the middle), while the missing red component (V, on the right) will increase.

So now to figure out what I have wrong in the VHDL when handling U and V. The values I am generating are signed. So I suspect that when they go negative, the multiplication is ending up flipped, and for some reason the sign bit is not being interpreted properly.
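
The kind of sign-handling mistake I suspect can be illustrated in Python. This is a guess at the failure mode, not a description of the actual VHDL bug; the 8-bit width, the example values and the helper name `as_signed_8bit` are all assumptions.

```python
def as_signed_8bit(raw):
    """Interpret an 8-bit register value as two's complement."""
    return raw - 256 if raw & 0x80 else raw

raw_u = (-16) & 0xFF     # -16 stored in 8 bits is 0xF0
sine = 100               # some positive sine table entry

print(raw_u * sine)                   # 24000: sign lost, large positive product
print(as_signed_8bit(raw_u) * sine)   # -1600: what was intended
```

A small negative U or V being treated as a large positive one would produce exactly the sort of sharp line in the panels where the value crosses zero.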

Okay, that seems to have helped: Now when I simulate the VHDL, I am seeing a much more even colour intensity. That said, it is also quite faint, as you can see here below how the chroma oscillation pattern (the angled bars) has very low intensity:

In comparison, the colour burst that tells the monitor how bright they should be for maximum colour saturation is much more intense:

So I have one or the other not in the correct amplitude.

The correct amplitude variation is +/- half of the sync level, i.e., +/- 24.

The colour burst part is indeed too intense. The generation of this is a bit fiddly, because of how I do a pile of bit-shifts to avoid multiplications. But the luma_drive signal is generated as a 10 bit value, and the colour burst is added onto that. This means we want an amplitude of +/- 24*4 = +/- 96, since the range is 10 bit instead of 8 bit. I think I have that right now.
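
The scaling arithmetic, written out in Python. The sync level of $30 is an assumption inferred from the +/- $18 figure above, and `burst_peak` is a made-up name.

```python
# Assumption: sync level of $30 in 8-bit DAC units, inferred from the +/- $18 burst figure.
SYNC_LEVEL_8BIT = 0x30

def burst_peak(bits):
    """Peak burst amplitude (half the sync level) rescaled to a signal of the given width."""
    return (SYNC_LEVEL_8BIT // 2) << (bits - 8)

print(burst_peak(8), burst_peak(10))   # 24 96
```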

As for the U and V signals, those I don't think are scaled the same, so will need to be fixed.

Right, I think I have fixed the scaling of U and V, and the result looks much more promising now:

The intensity of the colour burst and of the chroma in the active area look reasonably well matched now. I'll take a look at the oscilloscope view to be sure:

If anything now, I reckon the colour burst might be slightly lower amplitude than the pixel data. But that should be fine. Let's try making a test bitstream from that, and seeing how it looks:

Okay, that is stacks better. There are still problems, but we do at least now have fairly consistent colour saturation. It's still flickering between different hues, especially in the left most panels, though, and of course the hues are not correct. The four narrower bars are supposed to be grey, red, green and blue, which they clearly aren't. That said, the green and blue ones are kinda the right hue. In fact, I would say that in general, it is red that has the most problems in the whole image.

Red in PAL comes mostly from the V signal, which is supposed to alternate phase every raster line. Thus it seems quite plausible that there is something wrong with that logic.

Ah, found a very likely cause: The colour burst signal is also required to swing by 90 degrees between normal and inverted V signal lines, as described here. I was adjusting the phase of the pixel data colour, rather than of the phase of the colour burst. This was likely causing the problems. Rearranging that now, so that it does it the right way, and hopefully we will see stable colour in PAL -- even if the red channel is still not quite right (there is a 50% chance I have it inverted).
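
In other words, the burst phase and the V sign need to switch together, line by line. A minimal Python sketch of that pairing, using the 135 and 225 degree burst phases expressed in hexadegrees ($60 and $A0). Which line parity gets which setting is an assumption here, as is the function name.

```python
def pal_line_setup(line):
    """Return (burst_phase_hexadegrees, v_sign) for a raster line.

    Assumption: even lines are the 'normal V' lines; the real parity depends on the field sequence.
    """
    if line % 2 == 0:
        return 0x60, +1    # 135 degrees, normal V
    return 0xA0, -1        # 225 degrees, inverted V

for line in range(4):
    phase, v_sign = pal_line_setup(line)
    print(line, hex(phase), v_sign)
```

The two burst phases are 64 hexadegrees apart, which is the 90 degree swing mentioned above.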

Looks like I got it the right way around:

It's not perfect, as red is still under-saturated compared with blue, which I can improve. Also green is a bit under-saturated now, too. But it is a million times better than before. It is recognisably a colour PAL display. The non-linearity in the DACs is probably now as much of the problem as anything else, and might in particular be responsible for the uneven colour saturation in places.

But, let's see how a MEGA65 bitstream looks with it:

Not bad, I have to say :) I wasn't sure that 80 columns with colour would still be readable. That said, the camera has corrected what is a very real purple tint on the display. My crappy phone camera does a fair job at reproducing the tint, though:

While I'm running things, let's see how C64 mode / 40 columns looks:

I have to say, that this looks pretty darn good, apart from the purple tint. It is absolutely clearer than using the composite output from a C64. In fact, you can even do red text on blue background, that normally disappears into soup on a real C64:

The good camera has again desaturated the red a bit. The text is actually much more coloured. Again, out with the crappy phone camera to see how it really looks:

Well, how it looks in terms of colour, because my phone camera has horrible focus. The other camera correctly captures the sharpness, though.

So that's all good. The only problem now is that the interlaced mode of PAL now has colour instability. This is odd, as it was previously the other way around. Ah, no, I only thought it was the other way around ;) It is indeed consistent. That makes it much easier to investigate and fix.

But this blog post is now more than long enough, so I'm going to wrap it up here.

What I will say, though, is the VHDL part of this is now more or less all there, and with support, should be quite feasible for someone to contribute to, rather than myself continuing on it ahead of other competing priorities. So if you have an interest in composite video output (you don't need to know anything about how it works up-front), and would like to learn some VHDL and contribute to this feature on the MEGA65, drop in on the MEGA65 discord server (link from https://mega65.org).